Section: New Results

Recognition of Daily Activities by Embedding Visual Features within a Semantic Language

Participants: Francesco Verrini, Carlos F. Crispim Junior, Michal Koperski, François Brémond.

keywords: Activity of Daily Living, RGBD Sensors, Activity Recognition

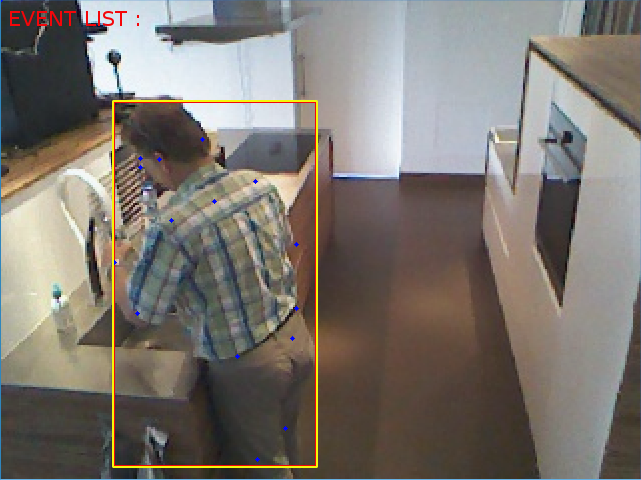

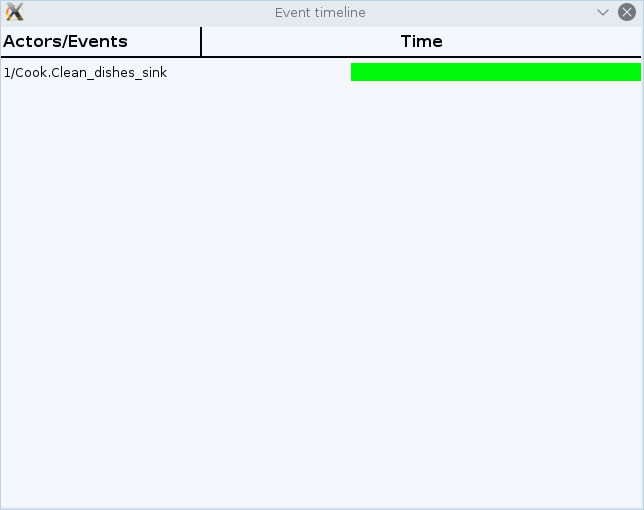

The recognition of complex actions is still a challenging task in Computer Vision, especially in daily living scenarios, where problems such as occlusion and a limited field of view are very common. Recognizing Activities of Daily Living (ADL) could improve the quality of life of older and/or impaired people and support their independent and healthy living, by using information and communication technologies at home, at the workplace and in public spaces. A method based on a scenario model built with semantic logic and an a priori knowledge formalism is able to take into account the spatio-temporal information of the scene, but falls short in identifying finer events occurring in a specific area: e.g., it can identify that a person is sitting, but it cannot determine whether the person is only sitting, using a laptop, or watching television. This method also requires the supervision of experts [58]. In this method, event recognition is performed after detection and tracking (Fig. 25). On the other hand, action recognition based on visual words [83] improves the recognition of actions involving a small amount of motion, but does not take the contextual information of the scene into account.

The goal of this work is to merge the two methods, using the spatio-temporal information of the ontology model to improve the results of action recognition through visual words. The actions detected with visual words are implemented as Primitive States in the scenario model and then used as Components of Composite States, which merge them with the spatio-temporal patterns that people display while performing activities of daily living (e.g., Person Inside Zone Sink, Person moving between zone Entry and zone Corridor).

On a challenging dataset such as SmartHome [22], which presents high intra-class variance and low inter-class variance, the results for some actions improve in precision and recall thanks to the spatial information. For example, for Clean Dishes the precision increases from 29% to 42% with the proposed method, thanks to the definition of a spatial zone for the sink. A drawback of this method is that the number of true positives cannot increase, since it strictly depends on the machine learning pipeline; precision therefore improves because of the lower number of false positives.
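The way a Composite State combines a visual-word Primitive State with a spatial zone, and why this can raise precision without adding true positives, can be illustrated with a minimal Python sketch. It assumes a hypothetical representation: axis-aligned rectangular zones, one visual-word label per clip, and one tracked person position per clip. The names `Zone`, `Detection`, `visual_words_only`, `composite_state` and `tp_and_precision`, as well as the toy data, are illustrative only. They are not the ontology language or the SmartHome annotations used in this work, and the resulting numbers are not the reported results.

```python
from dataclasses import dataclass


# Hypothetical representation, not the scenario/ontology language used in this work.
@dataclass(frozen=True)
class Zone:
    """Axis-aligned zone on the floor plane (e.g. the area around the sink)."""
    name: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def contains(self, x: float, y: float) -> bool:
        return self.x_min <= x <= self.x_max and self.y_min <= y <= self.y_max


@dataclass(frozen=True)
class Detection:
    """Primitive State: one action label predicted by the visual-word classifier."""
    clip_id: int
    label: str
    person_xy: tuple[float, float]  # tracked position of the person during the clip


def visual_words_only(detections, action):
    """Baseline: accept every clip that the classifier labels with `action`."""
    return {d.clip_id for d in detections if d.label == action}


def composite_state(detections, action, zone):
    """Composite State: the primitive action AND 'Person Inside Zone'.
    The spatial constraint can only discard detections, never add new ones."""
    return {d.clip_id for d in detections
            if d.label == action and zone.contains(*d.person_xy)}


def tp_and_precision(predicted, relevant):
    """Return (true positives, precision) for sets of clip ids."""
    tp = len(predicted & relevant)
    precision = tp / len(predicted) if predicted else 0.0
    return tp, precision


# Toy data (hypothetical): one correct detection, three false positives.
sink = Zone("Sink", 0.0, 0.0, 1.0, 1.0)
detections = [
    Detection(1, "clean_dishes", (0.5, 0.5)),  # correct, person at the sink
    Detection(2, "clean_dishes", (3.0, 3.0)),  # false positive, far from the sink
    Detection(3, "clean_dishes", (0.2, 0.8)),  # false positive, but inside the sink zone
    Detection(4, "clean_dishes", (4.0, 1.0)),  # false positive, far from the sink
]
ground_truth = {1, 5}  # clip 5 is never detected by the classifier

for name, predicted in [
    ("visual words", visual_words_only(detections, "clean_dishes")),
    ("composite state", composite_state(detections, "clean_dishes", sink)),
]:
    tp, p = tp_and_precision(predicted, ground_truth)
    print(f"{name}: TP={tp}, precision={p:.2f}")
# prints:
# visual words: TP=1, precision=0.25
# composite state: TP=1, precision=0.50
```

Because the Composite State is a conjunction that includes the Primitive State, every clip it accepts was already accepted by the classifier, so the true-positive count is bounded by the visual-word pipeline. Only false positives outside the zone (clips 2 and 4) are removed, while a false positive inside the zone (clip 3) survives.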